Overfitting is a key characteristic of regression-style data modeling with deep neural networks. Whereas considerable effort has been devoted to finding methods to reduce overfitting, little has been done to address the question of how to measure it. In most cases, overfitting is measured by the prediction error on a pre-selected test data set. This approach has led to various more or less successful techniques for reducing overfitting.

There are, however, some open questions about this rather ad hoc way of measuring overfitting. Firstly, the method requires that the test set does not participate in the training process. But most learning processes check repeatedly against the test data set to find optimal models or meta-parameters. In this way, information from the test data set "leaks" into the training process and compromises the separation between the training and test data sets. Secondly, there are no clear guidelines for choosing the test data set. The method implicitly assumes that a randomly selected test data set will reflect the intrinsic statistics of the data model; this assumption can be far from true in practice. Thirdly, many problems encountered in practice have rather limited data. Reserving part of the data as a test data set might significantly reduce the size of the training data set and therefore reduce the accuracy of the regression model.

In this note I'll present a simple way to quantify the overfitting of regression-style neural networks. The main idea of the method is that it generates test data by linear interpolation, instead of setting aside part of the training data as a test data set. The following diagram illustrates the method:

Strip overfitting with respect to points A and B.

The above diagram shows a neural network learning a map from a high-dimensional data set to the 2-dimensional plane. After completing the learning process, the network maps the two data points labeled A and B to A' and B', respectively. In order to measure the overfitting, we choose 3 evenly spaced linear interpolation points P1, P2, P3 between A and B, and calculate their images P'1, P'2, P'3 in the 2-dimensional plane with the trained network. Whereas A, P1, P2, P3, B form a straight line, the points A', P'1, P'2, P'3, B' normally don't. We then define the strip overfitting w.r.t. A and B as the size of the strip induced by the points A', P'1, P'2, P'3, B', shown as the shaded area in the diagram.
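The construction above can be sketched in a few lines of NumPy. The note does not spell out how the "size of the strip" is computed, so this sketch assumes one plausible reading: the sum of the areas of the triangles spanned by consecutive point triples (A', P'1, P'2), (P'1, P'2, P'3), (P'2, P'3, B'). The function names and the `model` argument (any callable that maps an array of input rows to an array of output rows with at least two columns) are hypothetical, not part of the original method description.

```python
import numpy as np

def interpolate(a, b, k=3):
    """Return a, k evenly spaced interior points, and b as rows of one array."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    t = np.linspace(0.0, 1.0, k + 2)[:, None]   # shape (k + 2, 1)
    return a + t * (b - a)

def strip_size(points):
    """Sum of the areas of triangles spanned by consecutive point triples.

    ASSUMPTION: the note only says "size of the strip"; a triangle strip is
    one reading of it, not a confirmed definition.
    """
    p = np.asarray(points, dtype=float)
    u = p[1:-1] - p[:-2]          # first edge of each triangle
    v = p[2:] - p[:-2]            # second edge of each triangle
    # Triangle area via the Gram determinant; works in any dimension >= 2.
    gram = (u * u).sum(axis=1) * (v * v).sum(axis=1) - (u * v).sum(axis=1) ** 2
    return 0.5 * np.sqrt(np.clip(gram, 0.0, None)).sum()

def strip_overfitting(model, a, b, k=3):
    """Strip size of the model's image of the segment from a to b."""
    return strip_size(model(interpolate(a, b, k)))

# Toy stand-in for a trained network: bends the segment into a parabola.
curved = lambda x: np.stack([x[:, 0], x[:, 0] ** 2], axis=1)
print(strip_overfitting(curved, np.zeros(5), np.ones(5)))
```

Under this reading, a map that keeps the five image points on a line yields a strip size of zero, and any bending of the trace yields a positive value.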

Based on the strip overfitting for a pair of data points, we can then estimate the overfitting for the whole training data set as the average strip overfitting over a fixed number of randomly selected pairs of data points.
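The averaging step can be sketched as follows. This is a minimal illustration, not the author's implementation: `mean_pair_score` and its parameters are hypothetical names, and `pair_score` stands for any function that returns the strip overfitting of a trained network for one pair of data points. The note does not say how pairs are drawn; the sketch assumes uniform sampling of two distinct rows.

```python
import numpy as np

def mean_pair_score(pair_score, data, n_pairs=100, seed=0):
    """Average pair_score(a, b) over n_pairs random pairs of distinct rows."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(n_pairs):
        # Draw two different row indices (ASSUMPTION: uniform sampling).
        i, j = rng.choice(len(data), size=2, replace=False)
        total += pair_score(data[i], data[j])
    return total / n_pairs

# Usage with a placeholder score (Euclidean distance), only to show the call.
data = np.random.default_rng(1).normal(size=(50, 8))
print(mean_pair_score(lambda a, b: float(np.linalg.norm(a - b)), data))
```

Fixing the seed keeps the selected pairs identical across networks, which makes the averages of two differently trained networks directly comparable.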

It should be noted that the number of interpolation points provides a way to control the scale of the overfitting measure: the more interpolation points we choose, the finer the strip and the smaller the strip overfitting. When the target space is one-dimensional, the strip collapses to a straight line and always has size zero. To accommodate this special case, we append an index component to each value in the series A', P'1, P'2, P'3, B', so that we get a series of two-dimensional vectors (A', 0), (P'1, 1), (P'2, 2), (P'3, 3), (B', 4), and we define its strip size as the strip overfitting for the one-dimensional target space.
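The one-dimensional case and the scaling effect can be illustrated with a short sketch. As before, the strip size is assumed to be the sum of the areas of triangles over consecutive point triples, which is one reading of the note rather than a confirmed definition; the function names and the toy target `t ** 2` are hypothetical.

```python
import numpy as np

def lift_with_index(values):
    """Turn scalars y_0..y_n into the 2-D points (y_0, 0), ..., (y_n, n)."""
    y = np.asarray(values, dtype=float).ravel()
    return np.column_stack([y, np.arange(len(y))])

def strip_size_2d(points):
    """Sum of triangle areas over consecutive triples (assumed strip size)."""
    p = np.asarray(points, dtype=float)
    u, v = p[1:-1] - p[:-2], p[2:] - p[:-2]
    cross = u[:, 0] * v[:, 1] - u[:, 1] * v[:, 0]   # twice the signed area
    return 0.5 * np.abs(cross).sum()

# Evenly spaced outputs lift to collinear points, so the strip size is zero.
print(strip_size_2d(lift_with_index([0.0, 1.0, 2.0, 3.0, 4.0])))

# A curved 1-D output: compare 3 and 7 interpolation points on the same pair.
for k in (3, 7):
    t = np.linspace(0.0, 1.0, k + 2)
    print(k, strip_size_2d(lift_with_index(t ** 2)))
```

Because the value depends on the number of interpolation points, strip overfitting figures are only comparable when they were computed with the same number of points.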

Note also that the strip overfitting becomes zero if the mapping induced by the network preserves straight lines between the data points. That means linear regression would be considered a kind of ideal regression with zero overfitting. Since most data models in practice are non-linear, zero strip overfitting won't be achieved globally for all pairs of data points.
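The zero-overfitting property of linear regression can be checked in one line. The symbols here are introduced only for illustration: $f(x) = Wx + c$ is an affine regression model and $P_t = A + t\,(B - A)$ with $0 \le t \le 1$ is a point on the segment from A to B.

```latex
f(P_t) = W\bigl(A + t\,(B - A)\bigr) + c
       = (WA + c) + t\,\bigl((WB + c) - (WA + c)\bigr)
       = A' + t\,(B' - A')
```

Every interpolation point is therefore mapped onto the segment from A' to B' at the same parameter $t$, so the image points are collinear and the strip has size zero.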

The interpolation technique also provides a direct way to visualize how a network generalizes. To see this, we can choose two data points, calculate a series of interpolation points between them, and then plot the trace that the network maps them to in the target space. As examples, the following two pictures show the traces of three pairs produced by two differently trained neural networks. Both networks have the same structure and are trained with the same algorithm; the only difference is that the network that produced the left picture used the dropout regularization technique to reduce overfitting. We see clearly that the three interpolation traces on the left side are closer to straight lines. Numerical calculation also confirms that the network for the map on the left side has smaller strip overfitting. This example indicates that strip overfitting as defined in this note may be suitable for characterizing overfitting phenomena.
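A trace plot of this kind could be produced along the following lines. This is a sketch under stated assumptions, not the tooling used for the pictures in this note: `model` is a hypothetical callable that maps an array of input rows to an array of 2-dimensional outputs, the function names and the output file name are made up, and plotting uses Matplotlib.

```python
import numpy as np

def interpolation_trace(model, a, b, n_points=50):
    """Map n_points evenly spaced points on the segment a..b through model."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    t = np.linspace(0.0, 1.0, n_points)[:, None]
    return model(a + t * (b - a))

def plot_traces(model, pairs, n_points=50, path="traces.png"):
    """Draw one curve per (a, b) pair and mark the mapped end points."""
    import matplotlib
    matplotlib.use("Agg")                  # render to a file, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    for a, b in pairs:
        trace = interpolation_trace(model, a, b, n_points)
        ax.plot(trace[:, 0], trace[:, 1])
        ax.scatter(trace[[0, -1], 0], trace[[0, -1], 1])
    fig.savefig(path)
    plt.close(fig)
```

Calling `plot_traces` once per network with the same list of pairs gives side-by-side pictures in which straighter traces correspond to smaller strip overfitting.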

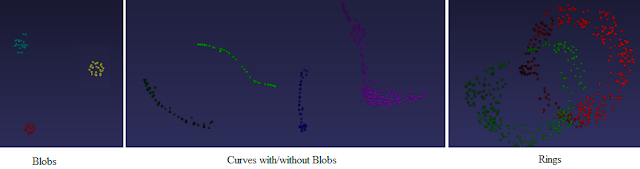

The next two pictures show more examples of interpolation traces that visualize the effect of the dropout regularization technique. These two pictures are produced by the same two networks used before, except that more pairs of data points have been chosen and the training data points are not shown for the sake of clarity. We see that dropout regularization makes the interpolation traces smoother and more homogeneous.

Discussion

This note has introduced the concept of strip overfitting to quantify overfitting in multidimensional regression models. The method essentially quantifies overfitting by the "distance" between a regression model and linear interpolation. We have demonstrated with examples that strip overfitting may be suitable for measuring and comparing the effect of regularization techniques such as dropout. Strip overfitting offers an interesting alternative tool to quantify and visualize overfitting in neural networks for multidimensional data modeling.
